Have you heard of the term Googlebot? When someone mentions it, most people automatically think of a cute little robot who is on a quest to travel the web and inspect various websites. Sadly, it’s just a computer program – but what is Googlebot exactly and what does it do? If you’re curious to find out, stay with us – we’ll go into details to ensure you know all you need to know about this topic to improve your SEO knowledge.

What Are Web Crawlers and How Do They Work?

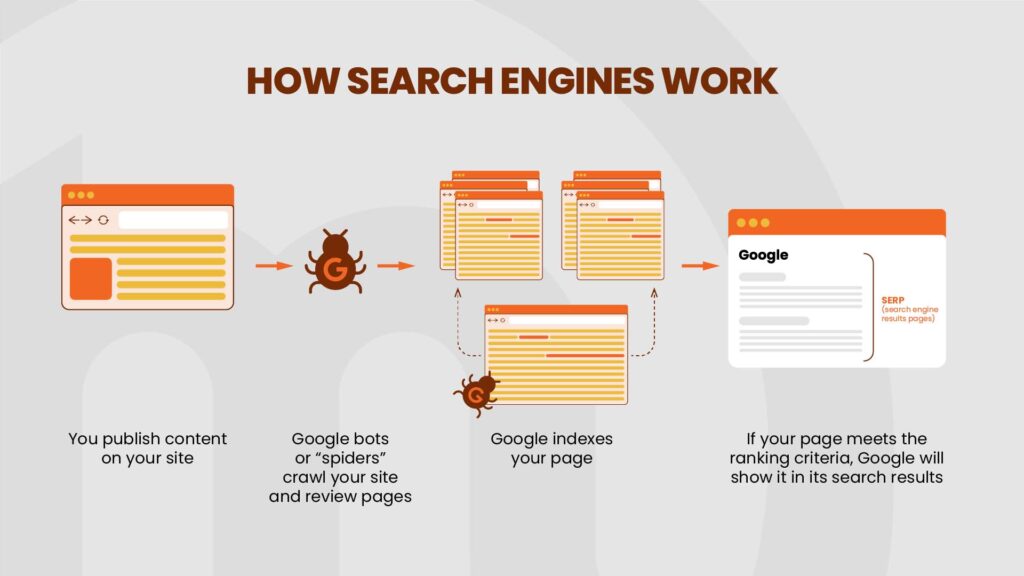

To start off, let’s go through what web crawlers are. Web crawlers, also called spiders or bots, are automated programs designed to browse the internet and collect data from websites. These programs work by crawling through pages – then they download the content so it can be stored in a database of a search engine. This is known as the indexing of websites.

Bots can also monitor changes and collect data for analysis. Different search engines have different web crawlers. Since Google is the predominant search engine most of us use, it’s important to know which crawler it uses – it’s Googlebot.

What Is Googlebot?

So, how do crawlers relate to Googlebot? Well, Googlebot is Google’s web crawler – it crawls web pages via links and collects data. Its purpose is to find and read new content and suggest what to add to the index – in other words, what to add to Google’s brain. This way, it builds a searchable index of the web. You can think of this as a large library – and crawling and indexing refer to updating the inventory. Additionally, the crawler can monitor website changes and gather data for analysis.

Googlebot is an automated software program that works in both desktop and mobile settings. It can compile over 1 million GB of data in less than a second. Once this data is indexed, the search results from your query are pulled directly from this index. It’s easy to see how this crawler is essential for the never-ending expansion of information that Google possesses.

Why Is This Important for SEO and Your Website Traffic?

If you think about it, the connection is quite easy to spot. When the bot crawls your website and collects data for the index, that data becomes the basis for where you’ll be ranked on search engine results pages (or SERPs) among similar content (of course, Google’s ranking factors determine the final position). This is precisely why understanding this bot is crucial for optimizing your website and building your SEO strategy. We all know that SEO is important – the way you optimize your pages can affect how efficiently the crawler can collect the data for indexing.

Googlebot is one of the main programs that keep Google working. If the bot doesn’t crawl and index a website, the site doesn’t exist in the eyes of Google – users can’t find it through search, which makes it practically invisible.

How Does Googlebot Work?

How does Googlebot see your website? Google has been quite open about the indexing process, which has stayed fairly consistent over the years. The first step for a bot is to find out which pages exist on the web – there isn’t a registry of all pages out there, so Googlebot has to search for them and add them to the list of pages that are known. This is called URL discovery. How does this work? Usually, the bot follows a link from a known page to a new one. For example, this happens when you add a new blog post on your website.

When the page is discovered, it will be added to the list of those that need to be crawled. So, Googlebot follows the pipeline that starts from a link – the one that was discovered during previous crawls – and then it crawls the page and processes information.

If it finds new links, the bot adds them to the list of links that need to be visited next. If it finds some changes in the page or broken links, it takes note of this so the index can be updated. The bot scans websites regularly to look for new links, pages, or content. Naturally, Googlebot is programmed not to crawl websites very fast since it’s important to avoid overloading them.
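To make the discovery pipeline more concrete, here is a minimal sketch of the idea in Python. It is not how Googlebot is actually implemented – the `SITE` dictionary is a made-up stand-in for real pages, and the `discover` function is a hypothetical name we use for illustration. It only shows the general pattern: start from a known page, follow links, and queue every newly found URL for a later visit.

```python
from collections import deque

# Hypothetical in-memory "web": each URL maps to the links found on that page.
SITE = {
    "/": ["/blog", "/about"],
    "/blog": ["/blog/new-post", "/"],
    "/about": [],
    "/blog/new-post": ["/about"],
}

def discover(seed, fetch_links):
    """Breadth-first URL discovery starting from one known page."""
    queue = deque([seed])  # pages waiting to be crawled
    known = {seed}         # every URL discovered so far
    crawl_order = []
    while queue:
        url = queue.popleft()
        crawl_order.append(url)
        for link in fetch_links(url):
            if link not in known:  # only new URLs are queued
                known.add(link)
                queue.append(link)
    return crawl_order

order = discover("/", lambda url: SITE.get(url, []))
print(order)
```

Notice that the new blog post is reached only because the blog page links to it. A real crawler would also wait between requests so it doesn’t overload the site.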

What Types of Googlebot Can You Encounter?

There are many different crawlers, each designed for a different purpose. For example, some crawlers check ad quality, like AdsBot and the AdSense crawler, while others check apps, like Mobile Apps Android. Each crawler identifies itself with a distinct string of text called a user agent. When it comes to Google, here are the most important crawlers you should remember:

- Googlebot (desktop),

- Googlebot (mobile),

- Googlebot Video,

- Googlebot News,

- Googlebot Images.
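If you want to tell these crawlers apart in your own logs, a rough sketch like the one below can help. The function name `classify_googlebot` and the matching rules are our own simplification: it looks for the `Googlebot-Image`, `Googlebot-Video`, and `Googlebot-News` tokens and treats the word "Mobile" as a sign of the mobile crawler. Keep in mind that a user agent string can be faked, so this only tells you what a visitor claims to be.

```python
def classify_googlebot(user_agent):
    """Map a user agent string to one of the crawler types listed above."""
    ua = user_agent.lower()
    if "googlebot" not in ua:
        return None  # does not claim to be Googlebot at all
    specialised = (
        ("googlebot-image", "Googlebot Images"),
        ("googlebot-video", "Googlebot Video"),
        ("googlebot-news", "Googlebot News"),
    )
    for token, label in specialised:
        if token in ua:
            return label
    # Simplifying assumption: the mobile crawler announces a mobile browser.
    return "Googlebot (mobile)" if "mobile" in ua else "Googlebot (desktop)"

desktop = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
print(classify_googlebot(desktop))
print(classify_googlebot("Googlebot-Image/1.0"))
```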

How to Get Googlebot to Crawl Your Site?

To ensure the bot can properly index your website, you need to check its crawlability – if the site is available to crawlers, you can be sure that Googlebot will crawl it often. Crawlability is essentially the accessibility of your website to bots. The easier it is for Googlebot to go through your pages, the better your rankings on result pages will be.

You can do a lot in terms of technical SEO to improve your site’s crawlability – but you can also unintentionally make mistakes that will get pages blocked from Googlebot, and that’s surely something you want to avoid. We must mention, though, that if you want some pages not to be indexed, you can block them from a crawler. Still, it’s crucial to make sure your most important pages are properly indexed.

One of the common questions regarding crawling is how to get Googlebot to crawl your site or how to crawl it faster. Your site may not be crawled fast enough if it doesn’t have enough authority – for example, when the site is new, so there aren’t many links pointing to it. If this is the case, Google doesn’t consider your website important, so it won’t waste time crawling it.

Other reasons include too many technical errors or the site being too slow. Additionally, if there is too much content that needs to be crawled, the bot will work slower. Of course, all these cases don’t work well in your favor. This means you’ll need to find ways to encourage the bot, and you do this by optimizing your website.

How Do You Optimize Your Site for Googlebot?

It’s important to note that Googlebot optimization isn’t the same as search engine optimization, although there is a lot of overlap. Search engine optimization is more focused on the queries, while Googlebot optimization focuses on how crawlers can better access your website. Let’s take a look at some of the things you can do to help a bot access your content.

Don’t Make Your Content Too Complicated in Technical Terms

The goal is to make your content visible in a text browser – if you complicate things too much, you may prevent this from happening. Googlebot finds it difficult to crawl sites that use Ajax and JavaScript (in some cases). The same goes for frames, Flash, and DHTML. Plain HTML remains the easiest format for the bot to read.

Use robots.txt to Your Advantage

We all know that robots.txt is a part of every good SEO strategy, but it’s also vital for directing Googlebot to the pages you want it to crawl. The bot will crawl every page on your site if there aren’t any directions about where it should and should not go. That’s why you should modify robots.txt accordingly – so that Googlebot spends less time on irrelevant parts of your site. That way, it will spend more time on important pages.
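Here is a small sketch of what such directions can look like and how you might test them before publishing. The rules and the `example.com` paths are invented for illustration, and the check uses Python’s built-in `urllib.robotparser`, which may interpret edge cases differently than Googlebot does, so treat it as a quick sanity check only.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: keep Googlebot away from low-value pages.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /cart/
Disallow: /search

User-agent: *
Disallow: /admin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

def allowed(path, agent="Googlebot"):
    """Return True if the given crawler may fetch the path under these rules."""
    return parser.can_fetch(agent, "https://example.com" + path)

for path in ("/blog/new-post", "/cart/checkout", "/search"):
    print(path, allowed(path))
```

With these rules, the blog post stays open to Googlebot while the cart and internal search pages are skipped.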

Create a Sitemap

A sitemap is, as the name suggests, a roadmap of your website. It’s a file on your server where you can list all the URLs you want crawled. This roadmap helps Googlebot find all the relevant pages and crawl and index them faster.
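As an illustration, the sketch below builds a tiny XML sitemap with Python’s standard library. The URLs and dates are placeholders, and `build_sitemap` is a name we made up for this example. The structure follows the common sitemap format, where a `urlset` element holds one `url` entry per page, each with a `loc` and an optional `lastmod`.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages):
    """Build sitemap XML from (url, last_modified) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

# Placeholder pages; replace them with the URLs you want crawled.
sitemap_xml = build_sitemap([
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/blog/new-post", "2024-02-01"),
])
print(sitemap_xml)
```

You would typically save the result as `sitemap.xml` on your server and submit it in Google Search Console.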

Always Create Fresh Content and Keep It High-Quality

Fresh content is crucial for drawing the crawler’s attention. Whether it’s new blog posts or updates to old content, it will bring the crawler to your site more frequently, which can improve your performance. However, it’s important to mention that content has to be of good quality – otherwise, it will likely have a negative impact. Googlebot is programmed to understand information, and if yours isn’t relevant and well-structured, it won’t perform well.

Improve Your Site’s Loading Speed

Googlebot has a certain crawl budget – meaning it will spend a limited amount of time on your website. If your pages load slowly, it won’t get a chance to crawl many of them, so improving loading speed increases the number of pages that will be crawled in one visit.

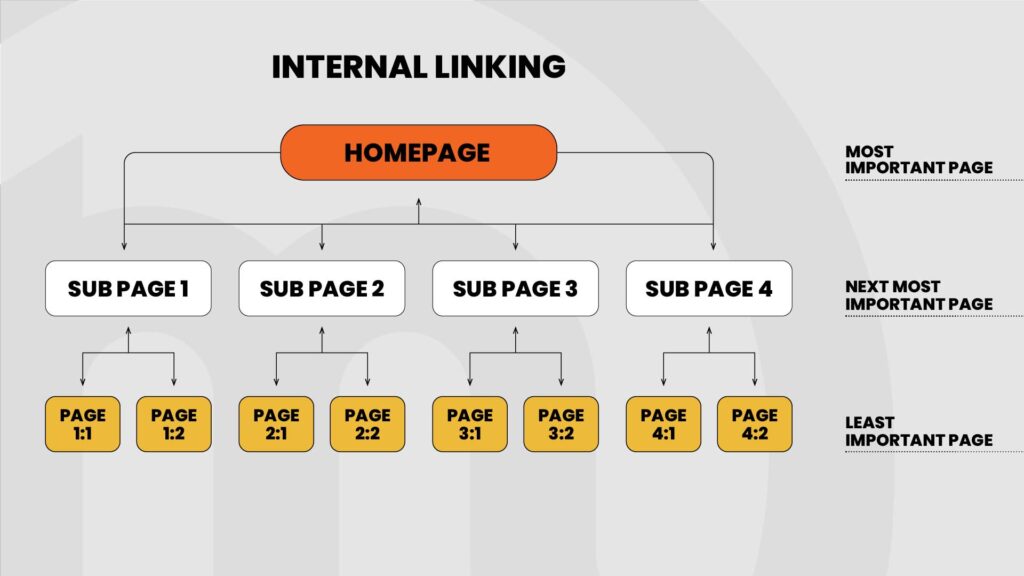

Use Internal Linking

Internal links with descriptive anchor text direct the crawler through your website. A well-organized system of links will make crawling much more efficient. Still, it’s crucial to mention that linked pages must be relevant to your content and that they shouldn’t already be reachable from the current page in another way.

How to Limit Crawling and Indexing of Your Website?

You can limit crawling in several ways. First, there is robots.txt, which we’ve already mentioned. There is also an option to mark your page as nofollow – whether as a link attribute or with a meta robots tag. Lastly, you can change the speed of crawling by using a tool in your Google Search Console called Crawl Rate Control. Now, when it comes to indexing, there are a few things you can do:

- Delete your content – That way, no one can find it, not just Googlebot.

- Use a URL removal tool – You’ll temporarily hide a page, and while it can still be crawled, it won’t show up in search results.

- Restrict access – You can put a password on a page or an entire website, and it will prevent bots from crawling it.

- Use a noindex meta tag – This tells Googlebot that the page shouldn’t be indexed, but it doesn’t stop it from crawling the website.
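To double-check which directives a page actually sends, you could scan its HTML for a robots meta tag. The sketch below does this with Python’s built-in `html.parser`. The sample page and the names `RobotsMetaParser` and `robots_directives` are our own for illustration, and the sketch only looks at meta tags named `robots` or `googlebot`, not at HTTP headers.

```python
from html.parser import HTMLParser

class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> style tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        name = (attributes.get("name") or "").lower()
        if name in ("robots", "googlebot"):
            content = attributes.get("content") or ""
            for directive in content.split(","):
                if directive.strip():
                    self.directives.add(directive.strip().lower())

def robots_directives(html):
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives

# Hypothetical page that should stay out of the index.
PAGE = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'
print(robots_directives(PAGE))
```

If `noindex` shows up on a page you want in search results, that is a sign something needs fixing.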

How Do You Know When Googlebot Visits Your Site and How to Verify It?

You can easily find out how often Googlebot visits your site if you check the log files or take a look at the Crawl section of your Google Search Console. Still, it’s important to be sure that it’s really Googlebot that’s visiting your site – there are many malicious bots out there that can pretend to be the real thing. So, how do you know if it’s really Googlebot? Luckily, Google has made this easy – it publishes a list of IP addresses that you can use to verify that requests are really from Googlebot. You simply compare them to the IP addresses in your logs.
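The comparison itself can be automated. Below is a minimal sketch using Python’s `ipaddress` module. The ranges in `PUBLISHED_RANGES` are placeholders taken from address blocks reserved for documentation, not Google’s real ranges, so you would need to swap in the current list that Google publishes. The function name `is_from_published_range` is also just our own choice.

```python
import ipaddress

# Placeholder ranges (reserved documentation blocks), NOT Google's real ones.
# Replace them with the ranges Google currently publishes for Googlebot.
PUBLISHED_RANGES = ["192.0.2.0/24", "2001:db8::/32"]
NETWORKS = [ipaddress.ip_network(cidr) for cidr in PUBLISHED_RANGES]

def is_from_published_range(ip):
    """Return True if the IP address falls inside any listed range."""
    address = ipaddress.ip_address(ip)
    return any(address in network for network in NETWORKS)

# Hypothetical IPs pulled from log lines that claim to be Googlebot.
for ip in ("192.0.2.44", "203.0.113.9"):
    print(ip, is_from_published_range(ip))
```

A request that claims to be Googlebot in its user agent but comes from an address outside the published ranges is worth treating with suspicion.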

Want to Improve Your Website’s Visibility? Then You Need an SEO Marketing Agency – Made Online Is Here for You

Improving your website and its visibility is a continuous process, and it’s one that’s usually better left to professionals. If you wish for better rankings on SERPs, you should enlist the help of a professional SEO agency like our Made Online. Let us help you with everything SEO-related – our SEO services include a personal approach to each client, so you can be sure they’ll be tailored to your individual needs. Contact us today, and let’s embark on this digital journey to the first page of Google together!